Last month, I read in a local paper: “Roughly three million Filipinos, or 2.6 percent of the national population, may have already been infected with the coronavirus disease 2019 (COVID-19) from April to June …”

The article went on to say:

- Cruz’s paper, titled “An Empirical Argument for Mass Testing: Crude Estimates of Unreported COVID19 Cases in the Philippines vis-à-vis Others in the ASEAN-5,” said statistical analysis showed that “49 out of every 50 COVID-19-positive persons in the Philippines have gone undetected during the second quarter of this year.” Based on the author’s computation, the Philippines may have had 2,812,891 total cases from April to June, instead of the 34,354 official tally for that period. This means only 1.22 percent of cases were detected at the time.
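As a quick check on the arithmetic in the quoted passage, the short sketch below recomputes the detection rate from the two case counts. The implied population is my own back-calculation from the quoted "2.6 percent" and is not a figure stated in the article.

```python
# Figures quoted in the news article above.
estimated_total_cases = 2_812_891   # author's estimate, April to June
reported_cases = 34_354             # official tally for the same period
share_of_population = 0.026         # "2.6 percent of the national population"

# Share of estimated cases that were officially detected.
detection_rate = reported_cases / estimated_total_cases
undetected_rate = 1 - detection_rate

# Back-calculated (not stated in the article): population implied by the 2.6% figure.
implied_population = estimated_total_cases / share_of_population

print(f"Detected:   {detection_rate:.2%}")
print(f"Undetected: {undetected_rate:.2%}")
print(f"Implied population: {implied_population:,.0f}")
```

The detected share comes out at about 1.22 percent, matching the article. The undetected share is therefore a little under 99 percent, which is close to, though not identical with, the quoted "49 out of every 50" (98 percent). None of this says anything about how uncertain the 2.8 million figure itself is.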

More than the numbers, what bothers me about this report is that it says the conclusions come from a statistical analysis without explaining to the audience the uncertainties attached to them. Out of curiosity, my father asked me how they got the estimate. Maybe the better question would have been, “How much error is lurking behind the estimate?” In my experience as a young statistician, the computation is the easy part. The harder and more crucial job is quantifying the error around an estimate and communicating it to stakeholders, because many things come into play.

In this blog post, I do not intend to prove or disprove whether we have millions of undetected cases, nor to undermine any effort to estimate the total number of cases. I only want to offer a statistical way of looking at the data we have, and their errors.

Data we have

Since May this year, our country has conducted symptoms-based tests and expanded targeted testing for “contact tracing, individuals arriving from overseas and those who tested positive in rapid antibody test” according to our President’s spokesperson Harry Roque (cited by Esguerra, 2020). But what about those who are truly positive yet test negative in a rapid antibody test? What about those who are asymptomatic? At a quick glance, we can say that basing our estimates and predictions solely on reported rates will bias our estimate of the actual number of cases in the country.

Uncertainties and design

As a statistician, the first thing that comes to my mind when population data are impossible to gather (which is most of the time!) is to take advantage of probability sampling. Well-planned sampling can be the foundation that allows us to trust estimates and conclusions. As its name suggests, probability sampling uses a known probability mechanism to select units at random, while non-probability sampling does not select units at random (e.g., by convenience or haphazardly). The edge of probability sampling is that it lets us characterize the uncertainty of estimates in terms of accuracy and precision.

All estimates have uncertainties; even the measurements that go into estimates have uncertainties. Uncertainty, from different sources, is inevitable in all branches of science. For me, the reason Statistics can be found in nearly all fields is that it offers ways to quantify certain types of uncertainty.

There are many types of uncertainty; common ones in Statistics are sampling error, measurement error, and coverage error. The best known is sampling error, which captures how much an estimate might change had different individuals been randomly selected for the sample. Its magnitude is often reported as a margin of error.
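To make the margin of error concrete, here is a minimal sketch for an estimated proportion under simple random sampling, using the usual normal (Wald) approximation. The survey numbers are hypothetical and chosen only for illustration; they are not real Philippine data.

```python
import math

def margin_of_error(p_hat, n, z=1.96):
    """Approximate 95% margin of error for a proportion from a simple random sample.

    Uses the normal (Wald) approximation and ignores the finite population correction.
    """
    return z * math.sqrt(p_hat * (1 - p_hat) / n)

# Hypothetical survey: 26 positives among 1,000 randomly selected people.
n = 1000
positives = 26
p_hat = positives / n
moe = margin_of_error(p_hat, n)

print(f"Estimate: {p_hat:.1%} +/- {moe:.1%}")

# Quadrupling the sample size halves the margin of error.
moe_4n = margin_of_error(p_hat, 4 * n)
print(f"With 4x the sample: +/- {moe_4n:.2%}")
```

This captures sampling error only. It assumes every person had a known chance of selection and that each test result is correct, which is exactly what measurement error and coverage error call into question.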

In the process of gathering responses, our measurements are never exact. Whether we use laboratory scales or survey questionnaires, there is always measurement error. This type of error reflects the accuracy and precision of the measurement instrument (including human errors in using the instrument!).

Coverage error is the failure of a sampling design to target the population that is actually of interest; it can take the form of under-coverage or over-coverage. It usually happens when there is no master list of the population or when essential information about the population is lacking.

Statistics can measure sampling error while also attempting to minimize the other sources of error through an effective sampling design. This is why it is ideal for statisticians to be involved from the beginning of a study. Statistics is not only about calculations after the data are collected; the design is even more important.

Assessing a country’s health status

Applying this to the issue of assessing the health status of the country in these times of COVID-19, the World Health Organization (WHO) released investigation protocols for countries to use and adapt. It is a good thing that WHO recognizes the importance of promoting sampling to support generalizing to a large population when monitoring the COVID-19 situation.

The Philippines’ current approach of restricted sampling cannot escape the bias of over-coverage of individuals who are already experiencing symptoms and under-coverage of individuals who are not showing any symptoms. Some countries have already started planning probability sampling and have even shared their research design ideas through webinars. As of this writing, the Philippines is not yet implementing a probability-based sampling design to support future statistics, but I feel it is just around the corner and needs some drive. I think we can redefine “mass testing” by putting effort into designing sampling plans that balance our resources against the sources of error.
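The coverage problem can be illustrated with a small calculation. The sketch below assumes, purely for illustration, a true prevalence, a share of infected people who show symptoms, and a share of uninfected people who show similar symptoms from other illnesses. It also assumes a perfect test. All of these numbers are hypothetical.

```python
def symptomatic_only_testing(prevalence, p_sympt_infected, p_sympt_uninfected):
    """Return (positivity rate among those tested, detected cases as a share of population).

    Assumes only symptomatic people are tested and that the test is perfect.
    """
    tested_infected = prevalence * p_sympt_infected
    tested_uninfected = (1 - prevalence) * p_sympt_uninfected
    positivity = tested_infected / (tested_infected + tested_uninfected)
    return positivity, tested_infected

# Hypothetical inputs, not estimates for the Philippines.
prevalence = 0.026          # true share of the population infected
p_sympt_infected = 0.60     # infected people who show symptoms
p_sympt_uninfected = 0.05   # uninfected people with similar symptoms

positivity, detected_share = symptomatic_only_testing(
    prevalence, p_sympt_infected, p_sympt_uninfected
)

print(f"True prevalence:           {prevalence:.1%}")
print(f"Positivity among tested:   {positivity:.1%}")
print(f"Detected share of population: {detected_share:.2%}")
```

Under these assumptions, the positivity rate among tested people overstates the true prevalence, while the count of detected cases understates it. Neither number, taken alone, estimates the quantity we care about.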

However, even if countries adopt well-designed sampling programs to estimate the number of infected individuals, there are serious limitations we will need to take into consideration. For one, a major threat to estimation is the measurement error associated with antibody tests. In public health terms, false positives and false negatives are measurement errors.
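One standard way to see how test error distorts a prevalence estimate, and how it can be adjusted for, is the Rogan-Gladen correction. The sketch below is an illustration only: the sensitivity, specificity, and prevalence values are hypothetical and do not describe any particular antibody test.

```python
def apparent_prevalence(true_prev, sensitivity, specificity):
    """Share of people expected to test positive, given an imperfect test."""
    return true_prev * sensitivity + (1 - true_prev) * (1 - specificity)

def rogan_gladen(apparent_prev, sensitivity, specificity):
    """Adjust an observed positive rate for known sensitivity and specificity."""
    denom = sensitivity + specificity - 1
    if denom <= 0:
        raise ValueError("sensitivity + specificity must exceed 1")
    corrected = (apparent_prev + specificity - 1) / denom
    # Clip to the valid range, since sampling noise can push the value outside [0, 1].
    return min(max(corrected, 0.0), 1.0)

# Hypothetical values for illustration.
true_prev = 0.026
sensitivity = 0.90   # P(test positive | infected)
specificity = 0.97   # P(test negative | not infected)

observed = apparent_prevalence(true_prev, sensitivity, specificity)
corrected = rogan_gladen(observed, sensitivity, specificity)

print(f"True prevalence:      {true_prev:.2%}")
print(f"Observed positives:   {observed:.2%}")
print(f"Corrected prevalence: {corrected:.2%}")
```

With these assumed values, the raw positive rate is roughly double the true prevalence, because false positives among the many uninfected people outnumber the missed infections. The correction only works as well as our knowledge of the test's sensitivity and specificity, which carry their own uncertainty.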

Aside from the test accuracy issue, the Philippines also faced data accuracy issues last April, which is another source of measurement error. The University of the Philippines (UP) Covid-19 Pandemic Response Team released a policy note last May, stating that “18 cases no longer have data on residence in the April 25 update… One patient who reportedly died on April 24 is no longer dead the following day.” On the positive side, this policy note led to improvements in data management and data handling by the Department of Health (DOH). The challenge now is to figure out how to minimize these errors. Data management and validation may help, though the recent omission of Date of Report of Removal (DateRepRem) from the publicly available data makes this task even harder for analysts. Dr. Peter Cayton of the UP Covid-19 Pandemic Response Team said that “What the loss of the DateRepRem also implies is the difficulty to track recoveries and deaths to the different geographic areas” (cited by Galarosa & Peniano, 2020). We cannot afford to continue with large measurement errors because even a small amount can contaminate our estimates.

The Philippines is an archipelago

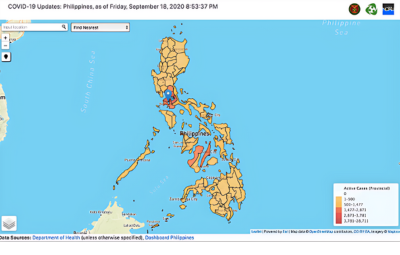

Estimating the total number of cases from a single national-level figure disregards the fact that the Philippines is an archipelago. This geography brings great variability that affects the spread of the disease. Batanes is still free of COVID-19, while a single hotspot region accounts for more than half of our active cases.

Reference: https://endcov.ph/map/

In fact, around 80% of our total cases came from only 20% of our provinces, which indicates large dispersion, according to Dr. Jomar Rabajante of the University of the Philippines Los Baños (UPLB). This inherent stratification may serve as additional information that can reduce uncertainties if incorporated into our sampling design. As I mentioned above, Statistics can also reduce uncertainties (aside from just quantifying them) given the appropriate information.
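To sketch how stratification can reduce uncertainty, the example below compares the variance of a national prevalence estimate under three designs with the same total sample size: simple random sampling, stratified sampling with proportional allocation, and stratified sampling with Neyman allocation. The two strata, their population shares, and their prevalences are hypothetical, chosen so that the smaller stratum holds 80% of the cases. Finite population corrections are ignored.

```python
import math

# Hypothetical strata: a hotspot area and the rest of the country.
weights = [0.2, 0.8]        # population share of each stratum
prevalence = [0.08, 0.005]  # assumed true prevalence in each stratum
n = 2000                    # total sample size

overall = sum(w * p for w, p in zip(weights, prevalence))
stratum_sd = [math.sqrt(p * (1 - p)) for p in prevalence]

# Variance of the estimated national prevalence under each design.
var_srs = overall * (1 - overall) / n
var_proportional = sum(w * s**2 for w, s in zip(weights, stratum_sd)) / n
var_neyman = sum(w * s for w, s in zip(weights, stratum_sd)) ** 2 / n

for name, var in [
    ("Simple random sample", var_srs),
    ("Stratified, proportional", var_proportional),
    ("Stratified, Neyman", var_neyman),
]:
    print(f"{name:26s} standard error = {math.sqrt(var):.4%}")
```

In this illustration, proportional allocation gives a modest gain over simple random sampling, and placing more of the sample in the high-variability hotspot stratum (Neyman allocation) gives a larger one. Real designs would also have to account for costs, logistics across islands, and the measurement errors discussed earlier.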

With the data we have right now, we cannot easily provide a more accurate estimate of the actual number of cases, but looking at the big picture through Statistics can give us a clue. To quote Dr. Rabajante, “do not look on just one metric”; instead, look at several metrics that together help us knit together the information we extract from the data.

Error and quality are intertwined

All of this boils down to the importance of understanding the data we have – and their errors. Data are the new wealth of the century because of the power they can provide for decision making. A wise country invests in collecting data, and designing how they will be collected, because quality data also provide boundaries of error and uncertainties.

Error is related to quality. That is, reporting the uncertainty associated with estimates reveals the quality of those estimates. Sampling designs based on statistical principles allow us to provide estimates with some sources of uncertainty quantified, rather than reporting estimates of uncertain quality. I hope that with this blog post I can raise awareness of the importance of Statistics in addressing challenges in obtaining and using data, and encourage young minds like me to explore this field further.