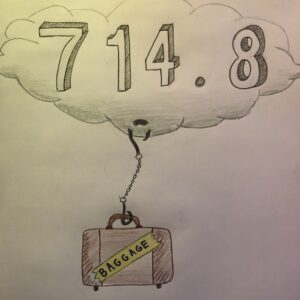

Numbers often get the spotlight in news stories. Sometimes they deserve center stage as valuable information describing a situation that would be hard, if not impossible, to capture adequately without them. But they can also easily mislead. All numbers in the news come with baggage. For some numbers, the baggage is out in the open like a carry-on, but for many it is of unknown form, size, and weight – and hidden deep in the baggage compartment.

Numbers by themselves, without their baggage, seem to make a story clear, or at least clearer. They suggest credibility and appear as evidence. They provide comfort by conveying that something is known about a situation. They invite us to trust the report. But their ability to bring clarity or trustworthiness to a story depends on how weighed down they are with hidden baggage.

I started writing this a few days ago to reflect on our complicated relationship with numbers, especially in the news. Today, I opened The New York Times to find a few stories to use as examples.

This morning (April 11, 2021), one of the headlines was “Young Migrants Crowd Shelters Posing Test for Biden,” followed by the lead sentence: “The administration is under intensifying pressure to expand its capacity to care for as many as 35,000 unaccompanied minors, part of a wave of people crossing the border.”

The 35,000, while still not without baggage, falls on the lighter end of the baggage scale. Its luggage is mostly carried on board and displayed out in the open. The number is presented with “as many as” wording and is honestly rounded, both of which signal uncertainty – as opposed to a statement like “35,102 unaccompanied minors will need care.” The 35,000 is not free of error, but it is clearly not intended to be taken as an exact count. Instead, it is meant to provide valuable information about the situation – to let us gauge the extent of the problem – in a way that a purely verbal description could not. What words get us close to the information contained in the number 35,000? “A lot?” “A huge number of minors?” The picture stays vague until the number provides a tangible reference point.

Numbers like 35,000 have an important role to play in the news and often come as part of “official statistics” provided by a country’s government. But even official statistics carry hidden baggage, depending on the methods of data collection and analysis, and on the government or administration overseeing the gathering or reporting of such information. For example, another headline in today’s paper is “‘You can’t trust anyone’: Russia’s Hidden Covid Toll is an Open Secret,” followed by the lead sentence: “The country’s official coronavirus death toll is 102,649. But at least 300,000 more people died last year during the pandemic than were reported in Russia’s most widely cited official statistics.”

The contrast in reported precision between 102,649 and 300,000 is striking. It provides a nice example of how reporting a number at a higher level of precision (to the ones place, in this case) can make it seem trustworthy, as if it carries less baggage. The rounding in 35,000 and 300,000 makes it clear that those numbers are reported not as exact counts, but as a useful reference for the size of the problem. If the error could be as big as 300,000, there is not much point in distinguishing between 102,649 and 102,650 deaths. To be fair, 102,649 could indeed be an accurate count of some subset of the total deaths, but that potential baggage is stowed well out of view in this case.

We find ourselves wanting to know how much to trust a reported number, and to understand why it is being presented to us in the first place. Is it sensationalism? Is it to provide a useful reference? To establish context? To bolster one side of an argument? To judge such things, we have to consider the number’s potential baggage: both what is visible and what is hidden from view (whether on purpose or not). Political baggage, though its extent is often hard to gauge, is a type of baggage people understand, and unfortunately even expect under some governments. But some baggage is complicated, hidden inadvertently, or hard to access, as if it were held in customs where only people with particular credentials can examine all of it. For example, baggage gets tricky when the reported numbers are estimates developed to help us generalize conclusions beyond the data actually collected, often with the help of statistical reasoning and methods.

Before looking at another example, it’s worth taking a quick step back to reflect on our relationship with numbers in school. Education centered on numbers rarely involves (or at least rarely used to involve) talking about their baggage. Instead, most of us were taught to accept (or automatically state) the assumptions and conditions, and to calculate a right answer based on them. In mathematics classes, numbers were typically treated as either right or wrong. There was no expectation to carefully inspect the baggage. Even in statistics classes, the numbers turned in on an assignment or test were probably judged as right or wrong. So, when faced with a news story focused on a number, it can be hard to let go of the “right or wrong” interpretation and instead grab onto the more difficult question of “what baggage does this number carry with it?” In real life, it’s pretty safe to say the number is “wrong” to some degree, and that degree depends on its baggage.

In a quick scan of The New York Times on April 9, 2021 (the day I started writing this), I found an article about efforts to understand potential learning losses associated with the pandemic. The article states: “A preliminary national study of 98,000 students from Policy Analysis for California Education, an independent group with ties to several large universities, found that as of late fall, second graders were 26 percent behind where they would have been, absent the pandemic, in their ability to read aloud accurately and quickly. Third graders were 33 percent behind.”

Taken by itself, this paragraph offers the rather alarming figures of 26% and 33% behind! The journalist could easily have focused only on these and created a fairly dramatic story, eliciting fear among parents, educators, and others. No statistical measures of uncertainty are provided, and there is no talk of baggage. The reader is implicitly asked to trust the numbers through the references to “98,000 students” and an “independent group with ties to several large universities.” On its own, the summary does little to acknowledge the baggage the numbers are dragging along.

However, the article then goes on to say “At least one large study found no decline in fall reading performance, and only modest losses in math.” This brief description of a different conclusion from another large study is all it takes to make clear that both studies come with baggage. Presenting differing conclusions is incredibly valuable. It may be that both are completely reasonable summaries of their respective situations, given the different baggage they carry. In other words, one need not be right and the other wrong. There are many questions to ask, and there is no simple answer to how much, if at all, student learning has declined: it depends on the individuals included, how things are measured, what criteria are used, and so on. So much baggage.

The article then does start rummaging through parts of the baggage, going on to say “But testing experts caution that the true impact of the pandemic on learning could be greater than is currently visible. Many of the students most at risk academically are missing from research because they are participating irregularly in online learning, have not been tested or have dropped off public school enrollment rolls altogether. In addition, some students have been tested at home, where they could have had assistance from adults.” The “testing experts” could go on. We could add, for example, why “percent behind” is incredibly hard to measure, and why summarizing it as a single number (per subject area and grade) aggregated over many schools, or even states, may be meaningless under the weight of all the baggage.

Number baggage takes many forms, ranging from small carry-ons to fancy suitcases, old-style trunks, shipping containers, or some combination. While we rarely get a chance to inspect what is really in the baggage, or even to see the extent of the collection, we need to know it’s there and talk openly about it. Hopefully, journalists and others take this into account when they report numbers.

I think the examples included in this post show journalists choosing to acknowledge number baggage, even if they couldn’t go into the messy details in a brief news article. The learning-loss article, for instance, provides several numbers as a focus but does not overemphasize the most dramatic ones. It provides enough context to openly acknowledge that the numbers carry baggage.

It is not realistic for a journalist to inspect and/or present a long list of the pieces of baggage and their contents (even with unlimited space it would be a challenge), but acknowledging that baggage exists is a huge first step toward conveying information more openly and honestly, and avoiding sensationalism. Baggage does not keep numbers from being useful, but it does mean we should not be fooled into trusting them as if they were the answers to one of our elementary school math problems. Ignoring or forgetting about baggage makes number-based disinformation too easy.