To be conservative in one’s assumptions is a much-celebrated virtue in science, but the term carries an ambiguity that deserves highlighting.

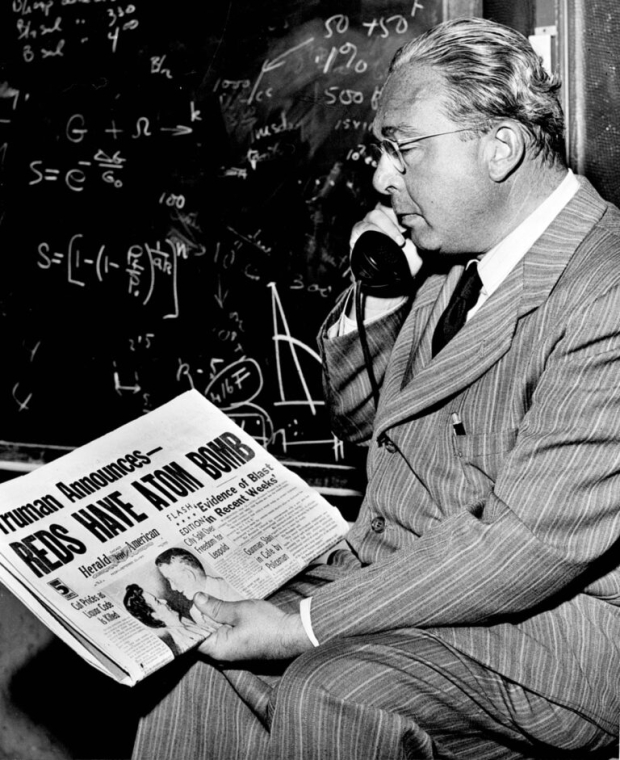

In 1939, at Columbia University in New York, just six years after he had come up with the crucial idea of a neutron-induced nuclear chain reaction, Hungarian-born physicist Leo Szilard worked with his colleague Enrico Fermi on making the chain reaction happen, in order to harvest the energy contained in the nucleus, possibly leading to the creation of the atomic bomb. Szilard later reflected on his disagreement with Fermi over how to think about the possible outcomes of their work:

“Fermi thought that the conservative thing was to play down the possibility that this may happen, and I thought the conservative thing was to assume that it would happen and take all the necessary precautions.”

– Szilard, as quoted in Byers (2003)

Their disagreement seems not to have been primarily one of substance – they both thought it wide open whether their experiments would succeed or not – but rather of how to think about this uncertainty. It seems they both took asymmetric views on this, but Szilard’s is the easier to understand: we have always lived without man-made nuclear chain reactions, so the outcome that is more likely to have unforeseen consequences, and is therefore more urgent to think through, is the one where we step into the brave new world in which such chain reactions exist. As for Fermi, a succinct summary of his position is that he was acting like Goofus:

Goofus and Gallant from the October 1980 issue of Highlights for Children magazine with captions added by Scott Alexander.

This image is taken from rationalist blogger Scott Alexander’s excellent blog post A Failure, But Not of Prediction from April this year. Of course, Goofus and Gallant are not just cartoon characters but also cartoonish in the figurative sense of the word; still, I find them useful for representing a central tension in how we think about science and knowledge.

Gallant approximates as best as he can the ideal rational thinker, who uses all the relevant evidence available, combines this with a reasonable prior in order to form the probability distribution P representing his beliefs about the world, and finally proceeds to take those actions that under P produce the best expected results (if this sounds Bayesian, well… yes, it is). Goofus deviates more blatantly from this ideal, but despite coming across as thick in the mid-20th century children’s comic strip, he should not be dismissed or scorned. On the contrary, he deserves acclaim and respect, because he embodies the traditions of Popperian falsificationism and statistical hypothesis testing that have long served science so well. The rigorous approach suggested by these traditions serves as an antidote to various cognitive biases that our brains come equipped with, such as our overly trigger-happy pattern recognition circuits and our tendency to overestimate how much certainty is warranted about our conclusions. And doing things the Goofus way – forming hypotheses, sticking to them until we have incontrovertible evidence of their falsehood, and then forming new and refined hypotheses, and so on – seems to guarantee (provided Einstein was right that the Lord is subtle but not malicious) that we zoom in, slowly and gradually, towards truth.

The problem with the Goofus approach, however, is that it is often ill-suited to providing guidance and support when urgent decision-making is called for. One reason is its slowness. Another is that in situations involving uncertainty, decision makers need probabilities about the true state of the world, something that Goofus scientists typically do not provide. In such situations, we need to switch to thinking more like Gallant. Szilard did this, whereas Fermi’s scientific Goofusness was so deep-seated that he couldn’t make the switch.
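The contrast between the two decision styles can be made concrete with a toy calculation. The following is only a minimal sketch: the `Scenario` fields, the numbers, and the `proof_threshold` parameter are hypothetical illustrations, and the Goofus rule is a deliberate caricature of "treat the hypothesis as false until it is all but proven", not a faithful model of statistical hypothesis testing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    """A decision under uncertainty; all numbers are hypothetical."""
    p_event: float          # believed probability that the bad event occurs
    loss_unprepared: float  # loss if it occurs and no precautions were taken
    loss_prepared: float    # loss if it occurs despite precautions
    precaution_cost: float  # cost of the precautions, paid either way


def gallant_takes_precautions(s: Scenario) -> bool:
    """Take precautions exactly when that lowers the expected loss under P."""
    expected_without = s.p_event * s.loss_unprepared
    expected_with = s.precaution_cost + s.p_event * s.loss_prepared
    return expected_with < expected_without


def goofus_takes_precautions(s: Scenario, proof_threshold: float = 0.99) -> bool:
    """Caricature: act only once the event is established almost beyond doubt."""
    return s.p_event >= proof_threshold


if __name__ == "__main__":
    # An unlikely but very costly outcome, with cheap precautions available.
    risky = Scenario(p_event=0.1, loss_unprepared=1000.0,
                     loss_prepared=100.0, precaution_cost=20.0)
    print("Gallant takes precautions:", gallant_takes_precautions(risky))
    print("Goofus takes precautions:", goofus_takes_precautions(risky))
```

With these made-up numbers, the expected loss is 100 without precautions and 30 with them, so Gallant acts even though the event is improbable, while Goofus waits for proof. Note that Gallant is not simply the more cautious of the two: if the precautions cost more than the expected loss they avert, the same rule tells him not to take them.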

Similar failures occur all the time, but examples seem especially abundant during the ongoing covid-19 crisis. Alexander (in the aforementioned blog post) offers some such examples, but the examples I give here will be taken from how the crisis has been dealt with in my home country, Sweden. The Swedish approach has generated much discussion, including internationally. A central aspect that I have discussed and criticized extensively on earlier occasions (such as in an op-ed in Dagens Nyheter and a podcast with American economist James Miller) is the role of herd immunity in the strategy employed by the Public Health Agency of Sweden (FHM). Here, however, I will focus on the role of science and the Goofus vs. Gallant dichotomy in FHM’s decisions and recommendations.

Throughout the pandemic, leading spokespersons at the FHM have emphasized their policy of taking only actions that are supported by science (see e.g., here), and even how this distinguishes Sweden from other countries (such as here). Benign as this may sound, it seems to me that it also reflects an undue reliance on Goofus thinking even when a Gallant approach is needed.

A glaring example of this is when FHM’s director general Johan Carlson was asked, in early March, what worst-case scenario they were planning for, in terms of the percentage of the Swedish population infected by the virus. Around the same time, the analogous question was put to the corresponding health authorities in our neighboring countries Denmark and Norway, and their answers were 10-15% and 25%, respectively. Carlson, on the other hand, refused to go higher than 0.1-0.15%. He justified his estimate by pointing to the Hubei region in China, where this was the proportion of the population diagnosed with the infection – a world record at that time. From a Goofus perspective, this is impeccable, because the idea that this world record can be broken is a new one that can be treated as false until incontrovertible evidence proves otherwise, i.e., when the record is broken. On the other hand, from a Gallant perspective, it is of course reckless to take for granted that a pandemic can never grow worse than what we have seen so far.

FHM’s negative attitude to face masks for the general public seems to be another example. In the earliest stages of the crisis, there was probably good reason to avoid recommending face masks for the public, as this could have led to a shortage of masks in health care, where the need is more dire. There is no longer a risk of such a shortage, but the FHM persists in its refusal to recommend face masks, pointing to the lack of sufficient scientific evidence for their usefulness. To a Goofus, this makes sense, as the mass of evidence is not entirely unambiguous, and there is no single study based on a randomized controlled trial with a p-value of 0.00001, backed up by peer review and successful replications, to point to as incontrovertible evidence. But to a Gallant, this is feeble, as most of the available evidence does point towards a significant (in the oomph sense of the term) effect of face masks; see, e.g., the Chu et al. survey and meta-analysis in The Lancet. Also of some interest, given the FHM’s repeated assertions that a face mask recommendation might backfire due to a Peltzman effect causing people wearing masks to behave more riskily in other ways, are the studies by Marchiori and Chen et al., whose results suggest a reverse Peltzman effect for face masks.

I believe that lives can be saved if we manage to convince key officials at the FHM of the importance, in a crisis, of thinking a bit less like a Goofus and a bit more like a Gallant.

So much for covid-19 (for today). I want to end instead by showing how the Goofus vs. Gallant dichotomy plays out in another favorite topic of mine: the future of AI (artificial intelligence).

To a naturalist (in the sense antonymous to someone who believes in supernatural phenomena) it is clear that human intelligence is a natural phenomenon, and also (upon some reflection) that the human brain is probably very far from optimal for producing the greatest amount of intelligence per kilogram of matter. If we accept that, then there are arrangements of matter that produce superintelligence, defined as something that vastly outperforms humans across the full range of cognitive capacities that we have.

Whether or not it is within our power to create such arrangements of matter within a century or so is another matter. It would be premature to point to the ongoing AI gold rush, driven by neural network and machine learning techniques, to deduce that such a breakthrough is imminent. On the other hand, we do not know that it is far away, or whether or not we will ever be able to do it. Evidence is fairly scant either way. Still, an increasing number of thinkers have in recent years pointed to the possibility, and observed that the emergence of superintelligence is likely to turn out to be either the best or the worst thing that ever happened in human history. See the excellent books by Nick Bostrom (2014) and Stuart Russell (2019), or the shorter accounts by Häggström (2018) and Kelsey Piper (2019) for more on this, and on the need to succeed in the task that has become known as AI Alignment, which in short can be described as making sure that the first superintelligent AI has goals that are sufficiently aligned with our own to allow for a flourishing human future. If we create superintelligence without having solved AI Alignment… well, philosopher Toby Ord, in his brilliant and authoritative new book The Precipice: Existential Risk and the Future of Humanity, ranks unaligned AI as the greatest existential risk to humanity during the next 100 years, with engineered pandemics in second place, and both climate change and nuclear war surprisingly far behind (despite being, in Ord’s opinion, enormously important problems to solve).

Szilard in 1949. Photo courtesy of Argonne National Laboratory and obtained from Atomic Heritage Foundation website

Still, there are quite a few mainstream AI researchers and other commentators who dismiss Bostrom, Russell and the others as scaremongers talking moonshine (here I’ve borrowed the last two words from physicist Ernest Rutherford’s famous pronouncement in 1933, just the day before Szilard came up with the chain reaction idea, that “anyone who looked for a source of power in the transformation of the atoms was talking moonshine”). To mention just one example, leading AI researcher Andrew Ng proclaimed in 2015: “I don’t work on not turning AI evil today for the same reason I don’t worry about the problem of overpopulation on the planet Mars.”

The reader has surely figured out what I’m getting at here: when one dissects the arguments of Ng and other superintelligence deniers, one rarely finds much beyond the plain assertion that we haven’t (yet) figured out how to build superintelligence, so we needn’t be concerned with it perhaps happening in the future. This line of thought is strikingly similar to Johan Carlson’s aforementioned assertion in March this year that the possibility of the pandemic turning out worse than it already had in the Hubei region is not worth worrying about. It has Goofus written all over it, and is unacceptable to Gallants like Bostrom, Russell and other serious AI futurologists.

But did I not talk, earlier in this blog post, about urgent decision-making situations being the signal that we need to shift from a Goofus to a Gallant state of mind? Does superintelligent AI really belong in that category? Surely such an AI breakthrough is at least decades away? To this I have three answers. First, as Eliezer Yudkowsky has eloquently argued, the “at least decades” claim is less obviously true than first meets the eye. Second, even if the breakthrough is decades or more away, AI Alignment appears to be a very difficult problem to solve, so continued procrastination may not be a good idea. Third, Popperian falsificationism, which is the main philosophical justification of Goofusian thinking, is very much based on the idea of trial and error. For that to work, we need to get new chances when we err, but in the case of superintelligence, error may well mean that we all die, so no more new chances.

Trial and error doesn’t work in these domains. It needs to be replaced by (or at least suitably combined with) careful foresight. Goofuses are terrible at this, but Gallants can do it.